Deployment of a Single AWS Workload for a New AWS Customer

By Steve Kinsman, 21 February 2024

Country: Australia

Executive Summary

Akkodis was tasked with deploying an existing AWS workload for a public sector department that was not previously an AWS customer. What made this case unusual was that, although the department needed to use the workload, it did not have the staff to manage it.

As a result, the department was adopting AWS in short order, together with the workload, without any in-house staff training. Its goal was to have the workload deployed as cost-effectively as possible while keeping it maintainable and amenable to outsourcing. This goal influenced many architectural decisions.

When setting up a new AWS Organization for a client, Akkodis always follows AWS best practice and considers how to make the Organization scalable for future needs. However, things were more complicated in this case, because the moderate workload costs also had to be protected from being overwhelmed by AWS infrastructure costs and overheads.

Challenges

The client needed a new AWS Organization set up for the workload, following AWS and DevOps best practices and allowing for future expansion, while respecting the client’s desire to minimise infrastructure costs.

Strategy

The overall deployment strategy was to use the latest best-practice AWS services and configuration wherever possible for setting up accounts, networking and security. However, constraints on infrastructure costs meant that some best practices had to be adjusted and alternative mitigations implemented instead.

The design allows for scaling out to additional workloads in future wherever this did not incur significant extra cost. DevOps support was made generic so that it can also serve additional workloads. DevOps best practices, such as CI/CD pipelines and the separation of Development, Test, UAT and Production workloads into individual accounts, were adopted as well.

Solution

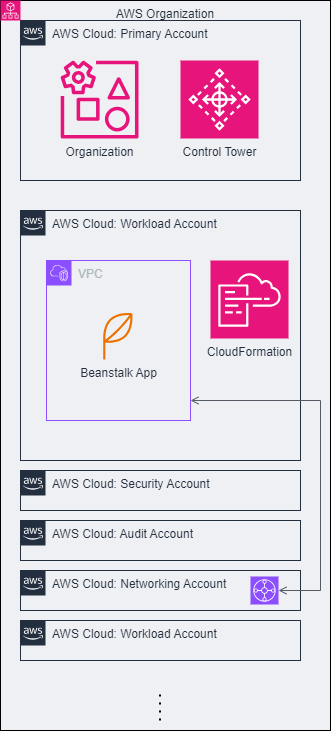

AWS Accounts

The AWS Organization and core accounts were set up as a Landing Zone using AWS Control Tower and the Landing Zone Accelerator. Control Tower provides a standard, well-architected cloud environment of AWS accounts (a "Landing Zone"), governance and security controls, and an Account Factory for creating new accounts and deploying them into the Landing Zone.

The Landing Zone provides a baseline for getting started with multi-account architecture, IAM, governance, observability, data security and logging. Controls are high-level rules that provide ongoing governance for the overall AWS environment; preventive, detective and proactive controls were all configured.

The Landing Zone Accelerator was deployed in this environment. It provided a jumpstart for setting up a secure, well-architected AWS environment by provisioning a foundational landing zone. It established a baseline multi-account structure, policies, and guardrails based on AWS best practices and alignment with global compliance frameworks. It also provided an effective way to add account customisations such as controls and security services.

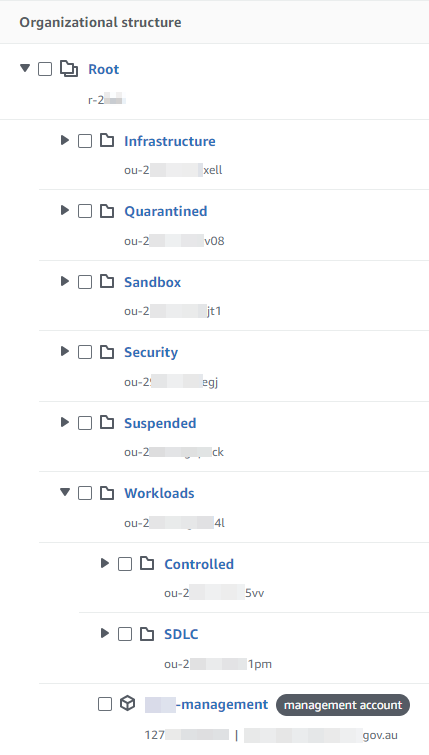

An Organizational Unit (OU) hierarchy was set up to organise the core and workload accounts and to allow Service Control Policies (SCPs) to be applied across appropriate groups of accounts. The main top-level OUs are Infrastructure, Security and Workloads. The Workloads OU contains child OUs for Controlled workload accounts (Production and UAT) and for Software Development Lifecycle (SDLC) accounts (Test and Development).
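A small model of the hierarchy may help show why grouping accounts into OUs matters for SCPs: a policy attached to the root or to any OU above an account is inherited by that account. The sketch below is illustrative only; the child OU names "Controlled" and "SDLC" and the account names are hypothetical, and it does not call any AWS API.

```python
# Hypothetical OU layout mirroring the hierarchy described above.
# The child OU names "Controlled" and "SDLC" are illustrative assumptions.
OU_TREE = {
    "Infrastructure": {},
    "Security": {},
    "Workloads": {
        "Controlled": {},  # Production and UAT accounts
        "SDLC": {},        # Test and Development accounts
    },
}

# Hypothetical account names placed in leaf OUs.
ACCOUNT_OU = {
    "workload-prod": ("Workloads", "Controlled"),
    "workload-uat": ("Workloads", "Controlled"),
    "workload-test": ("Workloads", "SDLC"),
    "workload-dev": ("Workloads", "SDLC"),
}


def ou_exists(path: tuple[str, ...]) -> bool:
    """Walk OU_TREE to confirm that every OU on the path exists."""
    node = OU_TREE
    for name in path:
        if name not in node:
            return False
        node = node[name]
    return True


def scp_attachment_points(account: str) -> list[str]:
    """List the targets whose attached SCPs apply to an account, root first.

    SCPs attached to the root or to any OU above the account are inherited,
    so the account is governed by every policy along this path.
    """
    path = ACCOUNT_OU[account]
    if not ou_exists(path):
        raise ValueError(f"unknown OU path {path!r}")
    targets = ["Root"]
    targets += ["/".join(path[: i + 1]) for i in range(len(path))]
    targets.append(account)  # SCPs can also be attached directly to an account
    return targets


print(scp_attachment_points("workload-dev"))
# ['Root', 'Workloads', 'Workloads/SDLC', 'workload-dev']
```

With this layout, a policy attached once to the Controlled OU governs both Production and UAT without being repeated per account.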

Security

Control Tower was used to set up and manage account access through AWS IAM Identity Center, because it automates the setup of Identity Center users, groups and permission sets, which are provisioned as IAM roles in member accounts, enforcing standardised IAM configurations. This streamlines the onboarding of new accounts and ensures that IAM best practices are followed consistently.

In addition to the mandatory controls in Control Tower, the strongly recommended controls were also enabled, as they are designed to enforce standard best practices for well-architected, multi-account environments. Elective controls were enabled selectively to meet non-functional requirements.

AWS CloudTrail was set up at the Organization level to aggregate activity from all accounts. This simplifies setup and management: Control Tower automatically deploys a consistent, standardised CloudTrail configuration and ensures that CloudTrail is enabled by default in every member account.

Other AWS security services enabled include AWS Config, Security Hub and Trusted Advisor.

Networking Design

Control Tower can perform VPC setup along with supporting network resources in each account, applying standardised automation and security. However, customisation options are limited; in this case the most suitable network architecture wasn’t supported by Control Tower, so network setup was done outside of Control Tower.

The main issue here was minimising network gateway costs while allowing outbound IPv4 internet access from many accounts in the Organization. Deploying NAT Gateways in each account's VPC would have been too expensive, as would linking those VPCs through a Transit Gateway so that they could share NAT Gateways. Instead, a single VPC shared across accounts was deployed.
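The cost argument above is mostly about how the number of billable gateway resources grows with the number of accounts. The sketch below counts them rather than pricing them, since rates vary by Region. It assumes one NAT Gateway per Availability Zone in every VPC that needs egress, and, for the Transit Gateway option, a dedicated egress VPC holding the shared NAT Gateways; both are common patterns, not details taken from this deployment.

```python
def nat_gateways(accounts: int, azs: int, shared_vpc: bool) -> int:
    """NAT Gateways needed, assuming one per AZ in every VPC that needs egress."""
    vpcs_with_egress = 1 if shared_vpc else accounts
    return vpcs_with_egress * azs


def tgw_attachments(accounts: int) -> int:
    """Transit Gateway attachments for centralised egress (assumed design):
    one per account VPC plus one for a dedicated egress VPC."""
    return accounts + 1


def monthly_hourly_charges(resources: int, hourly_rate: float, hours: float = 730.0) -> float:
    """Hourly charges only; per-GB data processing is excluded.
    Supply the current rate for your Region; none is assumed here."""
    return resources * hourly_rate * hours


accounts, azs = 6, 4  # six accounts with VPC access and four AZs, as in the text
print(nat_gateways(accounts, azs, shared_vpc=False))  # 24 (per-account VPCs)
print(nat_gateways(accounts, azs, shared_vpc=True))   # 4 (single Shared VPC)
print(tgw_attachments(accounts))                       # 7 (Transit Gateway option)
```

In the per-account design the gateway count grows linearly with accounts, whereas with a Shared VPC it depends only on the number of AZs.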

The VPC was created in the "shared-prod" account and its subnets were individually shared with other accounts. This doesn't alter any of the normal security boundaries: each account still owns the resources it deploys in the VPC and can decide which other accounts have access to them. As with any VPC, the implicit local routing allows full communication between all subnets. Selective network isolation was achieved using network ACLs (NACLs), for example preventing communication between the environment tiers (Development, Test, UAT and Production) of workload accounts. This was made easier by sharing a separate set of subnets with each account, rather than letting multiple accounts place resources in the same subnets.
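To illustrate how NACLs can isolate environment tiers inside one VPC, the sketch below models the documented NACL evaluation order (rules checked from the lowest number up, first match wins, unmatched traffic denied). The subnet CIDRs, rule numbers and the "deny other tiers, then allow everything else" layout are hypothetical; real NACL entries also specify protocol and port ranges, which are omitted here.

```python
import ipaddress

# Hypothetical per-environment subnet CIDRs (one /25 each for brevity).
ENV_SUBNETS = {
    "Development": ["10.0.0.0/25"],
    "Test": ["10.0.0.128/25"],
    "UAT": ["10.0.1.0/25"],
    "Production": ["10.0.1.128/25"],
}


def inbound_rules(env: str) -> list[tuple[int, ipaddress.IPv4Network, str]]:
    """Numbered inbound entries for env's subnets: deny other tiers, then allow the rest."""
    rules = []
    number = 100
    for other, cidrs in ENV_SUBNETS.items():
        if other == env:
            continue
        for cidr in cidrs:
            rules.append((number, ipaddress.IPv4Network(cidr), "deny"))
            number += 10
    rules.append((number, ipaddress.IPv4Network("0.0.0.0/0"), "allow"))
    return rules


def evaluate(rules, source_ip: str) -> str:
    """Mimic NACL evaluation: lowest rule number first, first match wins,
    and the implicit final rule denies anything unmatched."""
    address = ipaddress.IPv4Address(source_ip)
    for _, network, action in sorted(rules, key=lambda rule: rule[0]):
        if address in network:
            return action
    return "deny"


dev_rules = inbound_rules("Development")
print(evaluate(dev_rules, "10.0.1.130"))  # deny (source is in a Production subnet)
print(evaluate(dev_rules, "10.0.0.20"))   # allow (source is in a Development subnet)
```

Because NACLs are stateless, a matching set of outbound rules would normally be needed as well.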

Scalability can be a concern with this approach: the design must be able to scale out to more workloads and, if needed, eventually support a switch to a more traditional or hybrid approach. The VPC was given a large /16 IPv4 CIDR block, allowing for many reasonably sized subnets. Each account was allocated 12 subnets, made up of three tiers across four Availability Zones:

- Public subnets tier

- NAT subnets tier (outbound internet only)

- Private subnets tier

Subnets were given /25 IPv4 CIDR blocks (128 addresses each). Because a /25 prefix is nine bits longer than the VPC's /16, there is room for 2^9 = 512 of them in the VPC. This allows significant future growth in the number of accounts with VPC access beyond the initial six.
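The subnet arithmetic can be checked with Python's standard `ipaddress` module. The sketch below assumes a hypothetical 10.0.0.0/16 range (the actual CIDR is not stated) and hypothetical AZ labels, and simply hands out consecutive /25 blocks per account, tier and AZ; the real allocation scheme may differ.

```python
import ipaddress

VPC_CIDR = ipaddress.IPv4Network("10.0.0.0/16")  # hypothetical; the real range isn't stated
SUBNET_PREFIX = 25
TIERS = ("public", "nat", "private")
AZS = ("az1", "az2", "az3", "az4")  # four Availability Zones, labels hypothetical
SUBNETS_PER_ACCOUNT = len(TIERS) * len(AZS)  # 3 tiers x 4 AZs = 12


def allocate(accounts):
    """Hand out consecutive /25 blocks: one per (account, tier, AZ)."""
    pool = VPC_CIDR.subnets(new_prefix=SUBNET_PREFIX)
    plan = {}
    for account in accounts:
        for tier in TIERS:
            for az in AZS:
                block = next(pool, None)
                if block is None:
                    raise ValueError("VPC CIDR exhausted")
                plan[(account, tier, az)] = block
    return plan


total = 2 ** (SUBNET_PREFIX - VPC_CIDR.prefixlen)  # /25 subnets that fit in the /16
max_accounts = total // SUBNETS_PER_ACCOUNT        # accounts at 12 subnets each
usable_hosts = 2 ** (32 - SUBNET_PREFIX) - 5       # AWS reserves 5 addresses per subnet
print(total, max_accounts, usable_hosts)           # 512 42 123
```

Under these assumptions the /16 accommodates 42 accounts with 123 usable addresses in each subnet, well beyond the initial six accounts.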

Networking DevOps

Networking resources were deployed using CloudFormation. Several templates are involved because the Shared VPC creates more deployment scenarios than usual:

- Resources deployed one-off in shared-prod, e.g. the VPC itself.

- Resources deployed per account but in shared-prod, e.g. subnets; build pipelines were used for these.

- Further one-off resources in shared-prod that must be deployed after the subnets have been created.

- Resources deployed in each account, e.g. EC2 Instance Connect Endpoints; a service-managed StackSet was used for this.
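The four scenarios above imply an ordering: the VPC comes first, per-account subnets depend on it, and both the post-subnet resources and the per-account resources depend on subnets. As a sketch, that ordering can be expressed as a dependency graph and resolved with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The stack names and the exact dependency edges are assumptions for illustration.

```python
from graphlib import TopologicalSorter


def deployment_graph(accounts):
    """Map each hypothetical stack name to the stacks it depends on."""
    graph = {"vpc": set()}  # one-off in shared-prod
    subnet_stacks = set()
    for account in accounts:
        name = f"subnets:{account}"  # per account, deployed in shared-prod by a pipeline
        graph[name] = {"vpc"}
        subnet_stacks.add(name)
    graph["post-subnet-shared"] = set(subnet_stacks)  # one-off, after all subnets exist
    for account in accounts:
        # Per-account resources (e.g. EC2 Instance Connect Endpoints) via a StackSet,
        # assumed to need that account's shared subnets first.
        graph[f"stackset:{account}"] = {f"subnets:{account}"}
    return graph


order = list(TopologicalSorter(deployment_graph(["dev", "test"])).static_order())
print(order)
```

Any order the sorter returns is a valid deployment sequence; independent stacks, such as the subnets for different accounts, could also be deployed in parallel.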

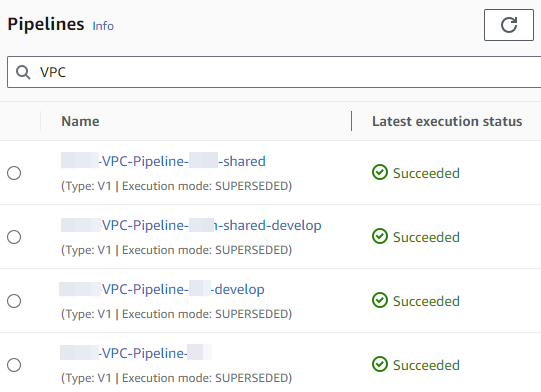

The build pipelines mentioned above were structured as follows. First, they were divided by domain: initially one domain for the shared accounts and one for the workload, although more workload domains may be added. For each domain there are "develop" and "main" pipelines, as described in the following section. Each pipeline was instantiated as a CloudFormation stack from the same template, with only the stack parameters varying.
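Because every pipeline comes from one template, the set of stacks is just the cross product of domains and branches. The sketch below builds the stack definitions as data; the stack naming convention and the parameter keys `Domain` and `BranchName` are hypothetical, while the `ParameterKey`/`ParameterValue` shape follows CloudFormation's stack parameter format.

```python
from itertools import product

DOMAINS = ("shared", "workload")  # initial domains; more workload domains may be added
BRANCHES = ("develop", "main")


def pipeline_stacks(domains=DOMAINS, branches=BRANCHES):
    """One stack per (domain, branch); only the parameters differ between stacks."""
    stacks = []
    for domain, branch in product(domains, branches):
        stacks.append(
            {
                "StackName": f"pipeline-{domain}-{branch}",  # hypothetical naming
                "Parameters": [
                    {"ParameterKey": "Domain", "ParameterValue": domain},
                    {"ParameterKey": "BranchName", "ParameterValue": branch},
                ],
            }
        )
    return stacks


for stack in pipeline_stacks():
    print(stack["StackName"])
```

Adding a workload domain then means adding one entry to `DOMAINS`, which yields its own develop/main pair. Each dictionary's keys match the `StackName` and `Parameters` arguments of boto3's CloudFormation `create_stack` call, alongside the shared template.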

CI/CD Pipelines

Pipelines such as the ones described above for network setup were based on the approach described in the "Secure Cross Account Continuous Delivery Pipelines using AWS CodePipeline" case study done for another client. In summary, a centralised approach was adopted in which CodePipeline pipelines and common resources are managed from a "Tools" account, in this case the shared-nonprod account. Supporting cross-account roles were also created in the target accounts; AWS CodePipeline assumes these roles to perform deployment actions within those accounts.
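The key piece of a cross-account role is its trust policy, which names the Tools account as a principal allowed to call `sts:AssumeRole`. The sketch below generates such a policy document; the account ID is a placeholder, and trusting the whole Tools account (`:root`) is a simplifying assumption. A tighter variant would name the pipeline's own role ARN as the principal.

```python
import json
import re


def cross_account_trust_policy(tools_account_id: str) -> dict:
    """Trust policy for a deployment role in a target account, letting principals
    in the Tools account assume it (subject to their own IAM permissions)."""
    if not re.fullmatch(r"\d{12}", tools_account_id):
        raise ValueError("AWS account IDs are 12 digits")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{tools_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# 111111111111 is a placeholder for the Tools (shared-nonprod) account ID.
print(json.dumps(cross_account_trust_policy("111111111111"), indent=2))
```

The role's separate permissions policy, not shown here, would then limit what the pipeline can deploy in the target account.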

Build pipelines are used in pairs: a "develop" pipeline is triggered by commits to the "develop" branch of the corresponding CodeCommit repository, and a "main" pipeline by commits to the "main" branch. The "develop" pipeline deploys to the Development and Test accounts, and the "main" pipeline to the UAT and Production accounts.
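The branch-to-environment mapping can be written down as a small lookup, which also makes it easy to check that each environment is owned by exactly one pipeline. This is a sketch of the routing rule only; listing Development before Test, and UAT before Production, is an assumed deployment order.

```python
BRANCH_TARGETS = {
    "develop": ("Development", "Test"),  # SDLC accounts
    "main": ("UAT", "Production"),       # Controlled accounts
}


def target_environments(branch: str) -> tuple[str, ...]:
    """Environments a commit to `branch` deploys to; other branches deploy nowhere."""
    return BRANCH_TARGETS.get(branch, ())


def pipeline_for(environment: str) -> str:
    """Return the branch whose pipeline deploys to `environment`."""
    for branch, environments in BRANCH_TARGETS.items():
        if environment in environments:
            return branch
    raise KeyError(environment)


print(target_environments("develop"))  # ('Development', 'Test')
print(pipeline_for("Production"))      # main
```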

Outcome

The client has now taken responsibility for the AWS workload, which runs in a new set of AWS accounts set up as a best-practice Landing Zone, while keeping infrastructure costs to a minimum. This environment is a strong foundation for the client's AWS journey and allows for significant expansion using the current architecture. Creating resources through DevOps approaches helps the client's small cohort of staff manage the environment more effectively and uniformly over time, and helps begin the transformation of the organisation's "run" capability.